Leetcode

link. If you are only interested in Yu's code's explanation, jump to

here:

Q:

'?' Matches any single character.

'*' Matches any sequence of characters (including the empty sequence).

The matching should cover the entire input string (not partial).

The function prototype should be:

bool isMatch(const char *s, const char *p)

Some examples:

isMatch("aa","a") → false

isMatch("aa","aa") → true

isMatch("aaa","aa") → false

isMatch("aa", "*") → true

isMatch("aa", "a*") → true

isMatch("ab", "?*") → true

isMatch("aab", "c*a*b") → false

Tag of this problem is DP + Greedy. Nice tags explain everything.

The following explanations and code pieces are mostly inspired by

link, it seems everyone is using this solution. To make things easier, I will call string

s "string", and string

p "pattern" from now on. It would be fun to have wildcard in both string and pattern, but for now we restrict wildcard to appear only in the pattern.

Basic idea:

DFS:

So we have three categories of pattern chars, normal ones (anything other than '?' and '*'), single-all matching char ('?') and the most interesting multi-matching char('*'). One can easily come up with:

bool match(string, strptr, pattern, patptr) {

if (pattern[patptr] != '*' && pattern[patptr] != '?')

return ( string[strptr] == pattern[patptr] &&

match(string, strptr+1, pattern, patptr+1) );

else if (pattern[patptr] == '?')

return match(string, strptr+1, pattern, patptr+1);

else

return ( match(string, strptr, pattern, patptr+1) || \

match(string, strptr+1, pattern, patptr+1));

}

Don't even bother trying this in the online judge system. The time complexity is definitely exponential with exponential level memory requirement.

Dynamic programming I:

With that recursive formula in mind, let's transform it to bottom-up DP. In DFS recursive, we are looking forward to chars behind us, In bottom-up DP, we are using the information we already calculated, thus we are looking backward. When a '*' appears, we match backward instead of forward. We use star to cancel the corresponding string char, pushing the problem back one string char, or we use the star to cancel nothing. Hard to explain without figures:

So the left one is using the star to cancel the current char in string, and leave the star untouched. Now the problem is traced back to whether we should use star to cancel string[i].

The right one is using the star to cancel nothing. Now the DP formula is:

bool match(stringm, strptr, pattern, patptr) {

if(memoizationTable[strptr][patptr] has any valid info)

return memoizationTable[strptr][patptr];

bool ret;

if (pattern[patptr] != '*' && pattern[patptr] != '?')

ret = ( string[strptr] == pattern[patptr] &&

match(string, strptr-1, pattern, patptr-1) );

else if (pattern[patptr] == '?')

ret = match(string, strptr-1, pattern, patptr-1);

else

ret = ( match(string, strptr-1, pattern, patptr) || \

match(string, strptr, pattern, patptr-1));

memoizationTable[strptr][patptr] = ret;

return ret;

}

Now the complexity is somewhat acceptable. With some memory optimization, one can easily come up with a solution of O(MN), O(N) complexity.

Try this with online judge system, still TLE.

Dynamic programming II:

Now that TLE assures me that this problem itself is special, conventional DP technique needs to be altered. There must be something unique here. After some careful thought, state machine and DFA stuff come back to my mind again. Check

KMP algorithm and this

post for more state machine ideas.

We have three categories, regular chars, '?' chars, and the annoying '*' chars. When the DFA is constructed, regular chars and '?' chars are all very easy to handle since one will only use this char once. The '*' char can be used for multiple times, or none at all, thus critical point is the '*' char. Say I have pattern "*a*b*c". At the first star I can use it for k1 times, second star I can use it for k2 times, The solution to this wild card problem can be represented by an array of [k1, k2, k3, ...] I can uniquely come up with one way to match the pattern to the string. Wait a sec, this is not DP, this is brute force. Where is the overlapping subproblem?

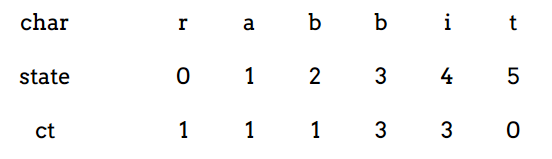

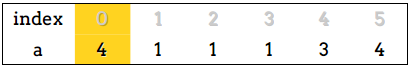

Consider this case

string: aaaaa cX

pattern: *a*a* c

While searching, suppose we are now having first star representing 1 '?', second star representing 2 '?', then when we visit the third star, we know it will fail. Next time we have first star representing 2 '?', second star representing 1 '?', when we visit the third star we can just give up. since now the pattern is aligned exactly the same as last time. So I was thinking using an offset to record this. Mark each star as one state, for each state, if an accumulative offset fails, record it, so next time it does not need to calculate again. Code looks like

this. Still fails online judge.

Dynamic programming III:

OK, more pruning is needed. First pruning, when the string pointer reaches end of string, but pattern pointer is not at the end yet, what should we do?

Case 1: If the remaining part of pattern is full of '*', we are good.

Case 2: Else, we have a mismatch. Check this (Upper one is string, lower one is pattern, this offset thing means I will use the star to represent the whole offset section in string):

So now we have a mismatch, and section b contains character that is not '*'. In this case, are we 100% sure that pattern will not match target string? Notice the only tunable parameter here is offset, which can only be increased, not decreased, since the program will try offset 0 first, then offset 1, then offset 2.... So the question is, is it possible to achieve matching between target string and pattern only by increasing offset?

No. when we increase offset, suppose we get this:

Since b is still here, now d+b string still cannot give us a pass.

So we have:

When target string matches to its end:

1. if pattern reaches its end, pattern matches target string.

2. if pattern is not at its end, but left with bunch of '*'s, pattern matches string.

3. if pattern is not at its end, with chars other than '*' left, pattern will not match string.

With this pruning, along with above dynamic programming trick,

this code piece barely passes online judge. AC time 1392ms. Yes, more than 1 sec.

Greedy:

More pruning! See I changed the section title. (This idea is borrowed from Yu's code.)

There is a very important backtracking procedure in the previous dynamic programming. Check this graph out.

In this case, since segment b will not match segment c, we have a fail. Moreover, I assume that

no matter how I tune offset 2, it will always give me a fail. Now I'm faced with this question:

Do I need to back track, and increase offset 1 such that the following situation might occur?

Answer is no.

Why? If this happens, then if I set offset 2 to

offset1'-offset1+offset2', we can use offset1 and offset2 to achieve the same goal. Here

offset1'-offset1+offset2' is definitely positive.

So we have:

When we have a mismatch, we only tune the nearest offset, if tuning the nearest offset cannot solve this problem, then pattern cannot match target string.

This means we only need to record the nearest offset. Memory space requirement O(1).

Conclusion:

Combining dynamic programming III and greedy, wildcard can be solved by using this code piece:

bool isMatch(const char *s, const char *p) {

const char* strptr = s;

const char* patptr = p;

const char* lastStrPtr = NULL;

const char* lastPatPtr = NULL;

while (*strptr) {

if (*strptr == *patptr || *patptr == '?') {

strptr++; patptr++;

}

else if (*patptr == '*') {

lastStrPtr = strptr;

lastPatPtr = patptr++;

}

else if (*strptr != *patptr) {

if (!lastStrPtr) return false;

strptr = ++lastStrPtr;

patptr = lastPatPtr + 1;

}

}

while(*patptr == '*') patptr++;

return !(*patptr);

}

AC time around 100ms. Very nice greedy approach.